by Shane Coursen with assistance from Gemini

Introduction: The “Crocodile Mouth” and a Quiet Disappearance

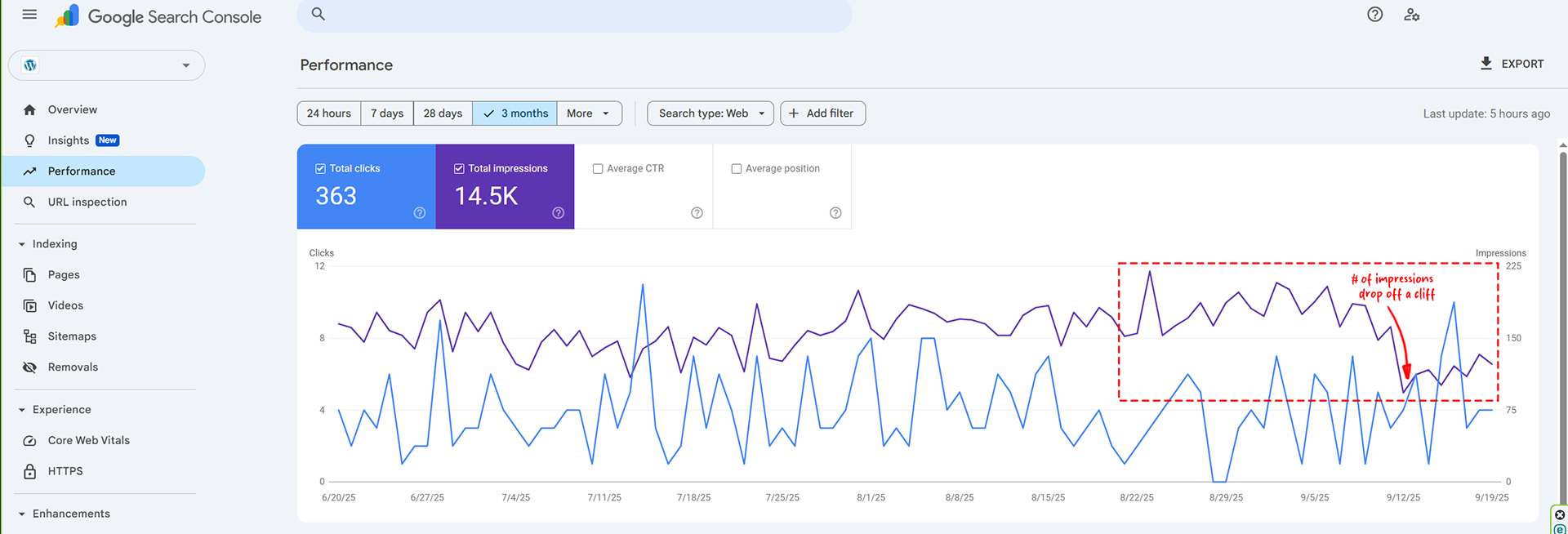

For over a year, SEOs have been staring at a “crocodile mouth” chart in Google Search Console: impressions rising while clicks flatten. Many attributed this directly to AI Overviews and called the phenomenon the “Great Decoupling.”

But while everyone was focused on AI Overviews, Google quietly made a technical change of its own: it disabled the &num=100 URL parameter. This seemingly small change has had a large and unexpected ripple effect, revealing that much of the data we had relied on was flawed.

This article argues that the “Great Decoupling” wasn’t just about AI Overviews. It was also a bot-driven mirage. By connecting these two distinct events, we can get a clearer picture of what’s really happening in search and how to adapt our strategies. Parts of this analysis rest on observed data and parts are still theory, but a clearer picture is the goal.

The Great Decoupling Narrative (What We Thought Was Happening)

Basically, an AI Overview is a super-quick, AI-generated summary of information that pops up right at the very top of a Google search results page. Think of it as Google’s way of giving you the answers you need without you having to click through a bunch of different websites.

These overviews appear in a special box, front and center on the SERP (which stands for search engine results page). They pull information from various sources on the web, synthesize it, and present it in a tidy package. They often include a list of key points and links to the websites they used, so you can always dig deeper if you want.

It’s a huge change for Google Search. AI Overviews make search less about a list of links you have to click through and research further, and more about providing instant, comprehensive answers.

The Zero-Click Problem

A zero-click search is a query on a search engine like Google where the user finds the answer they need directly on the search results page and doesn’t click any of the links to a website.

The Theory: AI Overviews and Zero-Click Searches

The rise of AI Overviews has accelerated the zero-click search phenomenon. The theory is that because AI Overviews are so effective at summarizing and synthesizing information, they can fully satisfy a query without the user ever needing to visit a website. For example, if you ask “what is a zero-click search?”, an AI Overview might pop up with a clear, concise definition. You’d get your answer instantly, with no need to click an external link to learn more.

Impressions vs. Clicks

This leads to a situation where a website gets an impression but not a click. An impression is simply when a link to your website appears in search results that a user sees. So when your content is used to create an AI Overview, your site still gets an impression because it’s cited as a source. However, because the user has already received the answer, they have no reason to click through to your website. This is what’s known as the “Great Decoupling”: impressions go up or stay flat while organic clicks to websites go down.

The “Decoupling”

This phenomenon creates an “alligator chart” in Google Search Console, a term used by SEOs to describe the widening gap between impressions and clicks. The “jaws” of the alligator open up as impressions rise and clicks fall.

The Alligator Chart Explained

In the past, impressions and clicks on a Google Search Console performance chart typically moved in a similar pattern. When one went up, the other followed. The two lines on the graph were close together, like a closed mouth.

With AI Overviews, this relationship is broken. Your site’s content is used to create an AI Overview, which gives you an impression because your link is visible. However, because the user’s question is answered right there on the search results page, they don’t click through to your site. This causes a divergence in the data: the purple impressions line rises, while the blue clicks line stagnates or falls.

This creates a visual representation of the “Great Decoupling”: two lines on a chart that were once close together are now moving in opposite directions, forming a shape that resembles the open jaws of an alligator. The larger the gap, the more pronounced the effect of AI Overviews on your traffic. It shows that while your site is gaining visibility and authority in Google’s eyes, it’s not necessarily translating into direct website traffic.
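One simple way to put a number on the gap is click-through rate (CTR), clicks divided by impressions: as the jaws open, CTR falls. The sketch below uses made-up monthly figures (hypothetical, not real Search Console data) and a hypothetical `Period` class to show the calculation.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """One reporting period of hypothetical Search Console totals."""
    label: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions (0 if no impressions)."""
        return self.clicks / self.impressions if self.impressions else 0.0


# Hypothetical figures shaped like an "alligator chart":
# impressions climb while clicks stay flat.
periods = [
    Period("month 1", 10_000, 500),
    Period("month 2", 14_000, 510),
    Period("month 3", 20_000, 500),
]

for p in periods:
    print(f"{p.label}: {p.impressions} impressions, {p.clicks} clicks, CTR {p.ctr:.1%}")
```

A steadily falling CTR while clicks hold steady is the numeric signature of the open jaws.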

But here’s the kicker: an AI Overview often gives the user the full answer right then and there, without their ever leaving the Google results page. So even though your site earned an impression, the user has no reason to click through to your page. In Google Search Console, that zero-click search is recorded as an impression with no click, so your click data goes down even as your impressions climb.

Hence the big disconnect: your site is being recognized as an authoritative source, but that doesn’t always translate into traffic. It means we have to start thinking about “success” not just in terms of clicks, but also in terms of brand visibility and authority.

The Unannounced Change: The Deprecation of &num=100

For years, the &num parameter was a URL trick that allowed you to change the number of search results displayed on a single Google search page. While the default is typically 10, by adding &num=100 to the end of a search query URL, you could get up to 100 results on one page.

This was a massive help for SEO tools and researchers because it made data collection much more efficient. Instead of making ten separate requests to get the top 100 results (which is what you have to do with the default 10-result pages), they could get all that data in a single request.

This saved a lot of time and computing power. However, Google has now deprecated this function, and adding the parameter to a search URL no longer works. This change has had a significant impact on SEO tools, increasing their operational costs and requiring them to re-engineer how they collect search data.
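To make the one-request-versus-ten difference concrete, here is a minimal sketch that only builds URL strings (it sends no requests). The `q` and `num` parameters come from the discussion above. Using `start` as the zero-based offset of the first result for pagination is an assumption, and the helper names are hypothetical.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.google.com/search"


def single_page_url(query: str, num: int = 100) -> str:
    """Old approach: one URL asking for up to `num` results via &num."""
    return f"{BASE_URL}?{urlencode({'q': query, 'num': num})}"


def paginated_urls(query: str, depth: int = 100, page_size: int = 10) -> list[str]:
    """Post-change approach: one URL per page of `page_size` results.

    Assumes `start` is the offset of the first result on each page.
    """
    return [
        f"{BASE_URL}?{urlencode({'q': query, 'start': offset})}"
        for offset in range(0, depth, page_size)
    ]


old = [single_page_url("zero-click search")]
new = paginated_urls("zero-click search")
print(len(old), len(new))  # 1 10
print(new[1])  # https://www.google.com/search?q=zero-click+search&start=10
```

Reaching the same depth now takes ten fetches instead of one, which is where the higher operational cost for tools comes from.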

The most immediate fallout was the widespread failure of rank-tracking and SEO tools. For years, platforms like Semrush, Ahrefs, and others relied on the &num=100 parameter as a core part of their data-gathering infrastructure. This parameter provided a highly efficient way to crawl Google’s Search Engine Results Pages (SERPs) and report on keyword rankings.

When the parameter stopped working, tools experienced a sudden breakdown in their ability to collect comprehensive data.

The removal of the &num=100 parameter fundamentally altered the economics of data collection.

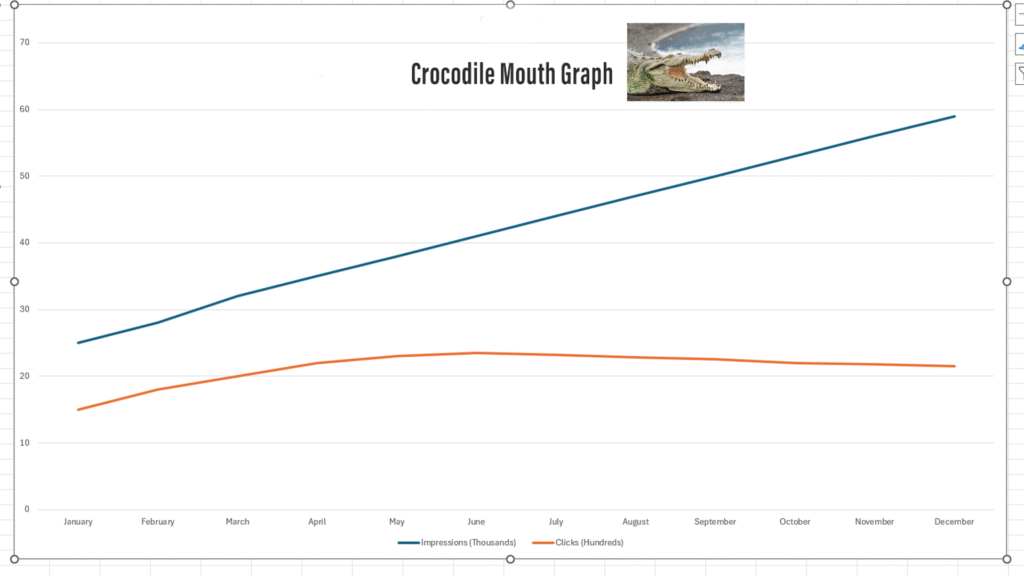

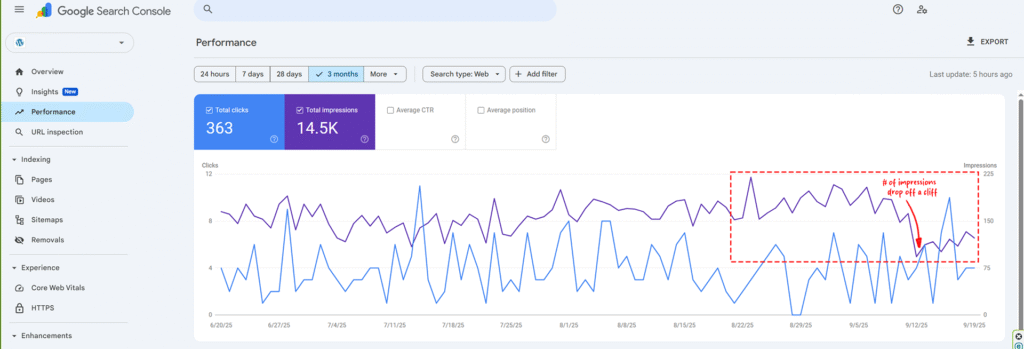

Perhaps the most surprising and revealing consequence was the sudden, sharp decline in desktop impressions reported in Google Search Console (GSC) for a vast number of websites. This drop coincided with the removal of the &num=100 parameter.

The prevailing theory is that for years, GSC’s desktop impression data was significantly “polluted” by bot traffic from SEO and AI tools that used the &num=100 parameter. When a bot loaded a single page with 100 results, it registered an impression for every single ranking on that page, even for positions like #99.
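A toy model makes the mechanism easier to see. Every number here is hypothetical. The model also assumes that humans only ever see page one (positions 1 to 10), while each bot fetch with &num=100 "sees" positions 1 to 100. Under those assumptions, a page ranking at position 60 logs impressions only from bots, so its impressions drop to zero once the bots stop.

```python
# Toy model only: all inputs are hypothetical, not measured data.
def daily_impressions(position: int, human_searches: int, bot_fetches: int,
                      human_depth: int = 10, bot_depth: int = 100) -> int:
    """Impressions logged for a result at `position` in one day.

    Assumes each human search shows only positions 1..human_depth and each
    bot fetch (an &num=100 request) shows positions 1..bot_depth.
    """
    from_humans = human_searches if position <= human_depth else 0
    from_bots = bot_fetches if position <= bot_depth else 0
    return from_humans + from_bots


for position in (5, 60):
    with_bots = daily_impressions(position, human_searches=1_000, bot_fetches=200)
    without_bots = daily_impressions(position, human_searches=1_000, bot_fetches=0)
    print(f"position {position}: {with_bots} impressions with bots, {without_bots} without")
# position 5: 1200 impressions with bots, 1000 without
# position 60: 200 impressions with bots, 0 without
```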

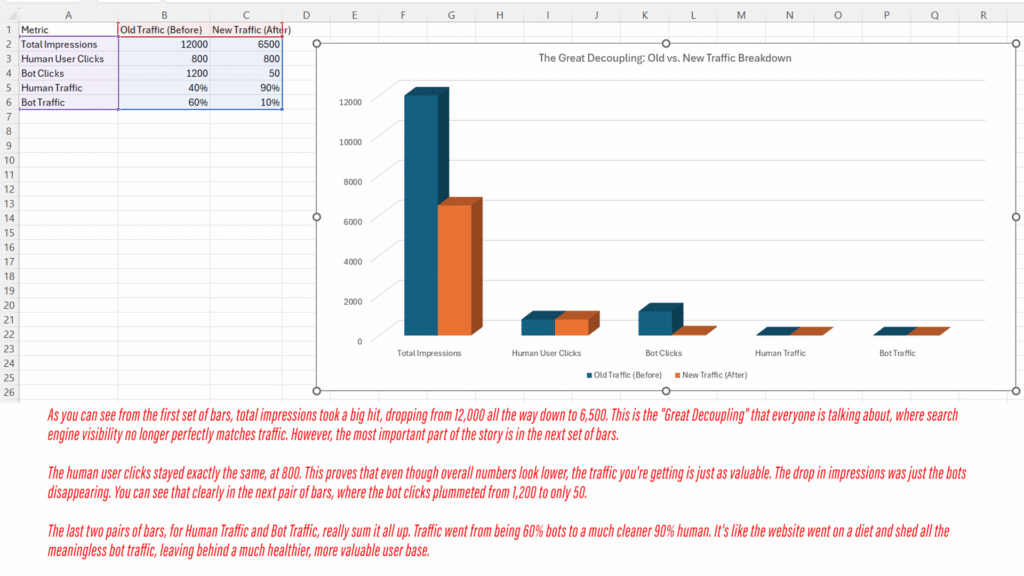

This discovery cast doubt on a widely discussed phenomenon known as “The Great Decoupling,” where GSC impressions had been steadily rising without a corresponding increase in clicks. While many had speculated this was due to the rise of AI Overviews, the removal of the &num=100 parameter suggests that a significant portion of that impression data was not from human users but from automated tools.

Why Oh Why did Google remove &num?

Protecting Infrastructure & Combating AI

One likely reason is to protect Google’s infrastructure from aggressive scraping. By forcing users and tools to make ten separate requests to get the same 100 results, the change significantly increases the operational costs and server load for third-party tools. That makes large-scale scraping far more expensive and difficult, which can deter it and, in turn, help Google conserve bandwidth and server resources. This is particularly relevant given competing AI models and other data-hungry services that scrape Google’s index to train their own models. The move makes it harder for them to harvest vast amounts of data efficiently, tightening Google’s control over its most valuable asset: its search index.

Cleaning Up Reporting Data

Another likely reason is to clean up Google’s own reporting data in Google Search Console (GSC). SEO professionals and tools that used the &num=100 parameter generated a massive number of “bot impressions” for results that were never seen by actual human users. With the parameter gone, these artificial impressions have disappeared, causing a sudden and sharp drop in desktop impressions for many sites. This makes GSC data more accurate and reflective of what real users actually see and interact with. The removal helps distinguish genuine human behavior from bot activity, providing a cleaner and more valuable dataset for website owners.

Silver Linings? (The Big Reveal)

With the bots no longer generating impressions for positions beyond the first page, GSC data is now considered more accurate and representative of actual user behavior. However, this has created a new baseline for reporting, forcing SEOs and businesses to re-evaluate their performance metrics and explain the sudden drop to clients who are accustomed to the inflated numbers.

The New SEO Playbook: From Rankings to Outcomes

The disappearance of the &num=100 parameter is a pivotal moment for SEO, signaling a move away from vanity metrics toward a new “playbook” focused on actual outcomes. The old way of doing things, which often involved obsessively tracking deep-rank positions and raw impression numbers, is becoming obsolete. These metrics were frequently inflated by bot activity and didn’t accurately reflect user behavior.

Prioritize the Top 20 & Beyond

The new reality demands we stop fixating on what doesn’t matter and prioritize the Top 20 rankings—the new sweet spot for SEO. This is where the overwhelming majority of human traffic and clicks occur. It’s also where your content is most likely to be seen and cited by AI models and to appear in featured snippets.
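If you export query-level data from GSC, narrowing it to the Top 20 can be as simple as a filter on average position. The sketch below uses a hypothetical `QueryRow` record with made-up rows. The field names are assumptions, not the exact columns of any GSC export.

```python
from typing import NamedTuple


class QueryRow(NamedTuple):
    """One hypothetical row of query-level performance data."""
    query: str
    clicks: int
    impressions: int
    position: float  # average position; lower is better


def top_n(rows: list[QueryRow], max_position: float = 20.0) -> list[QueryRow]:
    """Keep rows ranking within `max_position`, best-ranked first."""
    return sorted((r for r in rows if r.position <= max_position),
                  key=lambda r: r.position)


rows = [
    QueryRow("zero-click search", 120, 3_000, 4.2),
    QueryRow("num=100 parameter", 0, 900, 57.0),
    QueryRow("ai overviews seo", 40, 2_500, 14.8),
]
for row in top_n(rows):
    print(row.query, row.position)
# zero-click search 4.2
# ai overviews seo 14.8
```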

From Rankings to Results

Instead of celebrating a minor rank bump from position #50 to #40, the new goal is to celebrate an increase in organic traffic, leads, and sales. The mindset must shift from “How can I rank for this keyword?” to “How can ranking for this keyword drive business value?” Google’s change forces us to be more strategic and efficient with our efforts, ensuring every move we make contributes directly to measurable results. The ultimate goal is no longer just to rank, but to rank for things that truly matter.

Embrace “Generative Engine Optimization” (GEO):

Generative Engine Optimization (GEO) is the next evolution of SEO, focusing on creating content that is easily understood and used by large language models (LLMs) and other generative AI. This goes beyond traditional SEO, where content was written primarily for human readers. It is about becoming a trusted information source for both humans and machines.

Key Strategies for GEO

1. Write Authoritative, Parsable Content: The foundation of GEO is well-structured, authoritative content. Think of it as writing for a highly efficient but literal reader. Use clear headings, bullet points, and numbered lists to break up information into digestible chunks. Define key terms early and use concise sentences. This makes it easier for an AI to quickly parse, summarize, and synthesize your information. The goal is to make your content the perfect source for an AI to pull from when answering a user’s query.

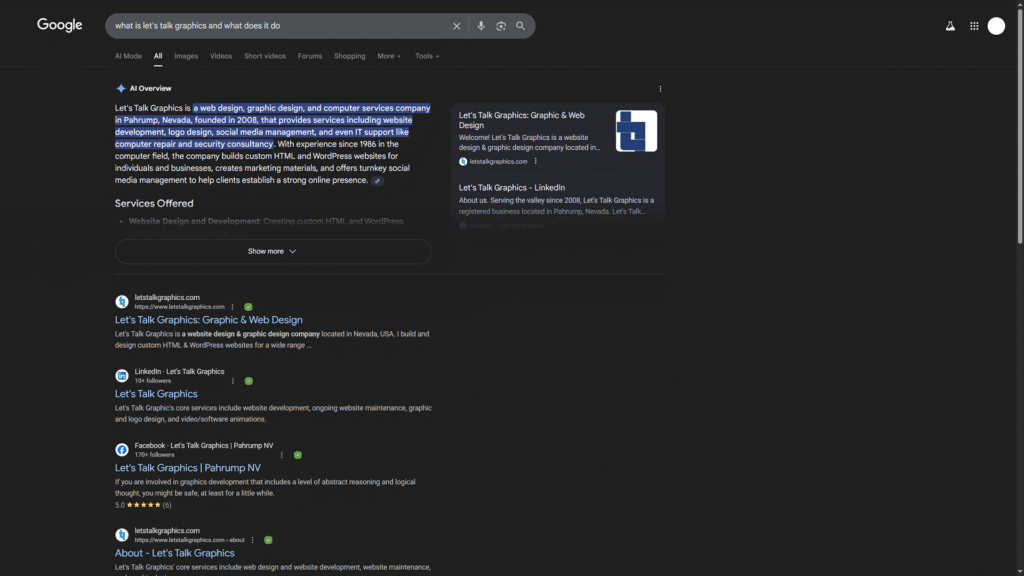

2. Build Brand Authority and Entity Recognition: Just as Google values reputable sources, so do AI models. The more your brand is recognized as an authority in its field, the more likely an AI will cite it. This involves building a strong online presence and generating E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) signals. When an AI “learns” about a topic, it looks for trusted entities. By consistently producing high-quality content that other authoritative sites reference, you build the kind of brand recognition that makes your site a go-to source for both search engines and AI. This is where your brand becomes a recognized entity in the knowledge graph, making it a reliable and trusted source for AI-powered responses.

Rethink Your Metrics:

In this new landscape, the old way of measuring success is obsolete. Rather than obsessing over vanity metrics like inflated impression counts and deep-rank positions, your focus must shift to tangible business outcomes: clicks, conversions, and revenue.

Google’s removal of the &num=100 parameter around mid-September 2025 created a “staircase effect” in analytics: a sudden, sharp drop in impression numbers. This is a good thing! The drop reflects cleaner data that is no longer polluted by impressions from bots and scrapers, so your Google Search Console (GSC) data is now a more accurate reflection of genuine human user behavior.
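To size the staircase on your own property, one rough approach is to compare mean daily impressions in a window before the change date with a window of the same length after it. This sketch assumes a simple `{date: impressions}` mapping and uses a hypothetical series. The 14-day window is an arbitrary choice, and `step_change` is a hypothetical helper name.

```python
from datetime import date, timedelta
from statistics import mean


def step_change(daily: dict[date, int], change_date: date, window: int = 14) -> float:
    """Relative change in mean daily impressions: the `window` days starting on
    `change_date` versus the `window` days just before it."""
    start = change_date - timedelta(days=window)
    end = change_date + timedelta(days=window)
    before = [v for d, v in daily.items() if start <= d < change_date]
    after = [v for d, v in daily.items() if change_date <= d < end]
    # statistics.mean raises StatisticsError if either window has no data.
    return (mean(after) - mean(before)) / mean(before)


# Hypothetical series: flat at 5,000/day, then flat at 3,000/day from Sept 12.
change = date(2025, 9, 12)
first_day = change - timedelta(days=14)
series = {first_day + timedelta(days=i): 5_000 if i < 14 else 3_000 for i in range(28)}
print(f"{step_change(series, change):.0%}")  # -40%
```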

Use GSC more intelligently. Look at the new, cleaner data to understand your true average position and click-through rates. While the raw numbers might not look as impressive, they are more valuable because they represent real user engagement. The “average position” metric will likely improve across the board, as it’s no longer being dragged down by bots that registered impressions for positions 50-100 without any clicks.
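Why would average position improve? Search Console bases average position on your site's topmost position in each result, averaged across impressions, so it behaves roughly like an impression-weighted mean. The sketch below treats it exactly that way, which is a simplification. It uses hypothetical impression counts to show how a batch of bot impressions at deep positions can drag that mean far down the page.

```python
from collections import Counter


def average_position(impressions_by_position: dict[int, int]) -> float:
    """Impression-weighted mean position (a simplification of how GSC
    averages your topmost position across impressions)."""
    total = sum(impressions_by_position.values())
    if total == 0:
        raise ValueError("no impressions")
    return sum(pos * n for pos, n in impressions_by_position.items()) / total


human = {3: 900, 8: 100}                 # hypothetical human impressions
bots = {3: 50, 8: 50, 60: 300, 90: 300}  # hypothetical &num=100 bot impressions

# Counter addition sums impression counts position by position.
with_bots = average_position(Counter(human) + Counter(bots))
without_bots = average_position(human)
print(f"{with_bots:.1f} vs {without_bots:.1f}")  # 28.9 vs 3.5
```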

To avoid misinterpreting this change in future reports, it’s crucial to annotate your analytics. Mark a clear line in your Google Analytics and GSC dashboards on or around September 12, 2025 (when &num=100 was deprecated) and another for May 2024 (when AI Overviews began appearing in the U.S.). This will help you and your stakeholders understand why your historical data trends have changed, allowing you to establish a new, more accurate baseline for performance.
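If you also keep reporting data outside a dashboard, a small helper can tag each day with its era so that reports compare like with like. The two dates come from the paragraph above. Pinning "May 2024" to May 1 is an assumption, and the era labels and helper names are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Dates from the article; treating "May 2024" as May 1 is an assumption.
AI_OVERVIEWS_US = date(2024, 5, 1)
NUM_100_REMOVED = date(2025, 9, 12)


def era(day: date) -> str:
    """Hypothetical era label used to annotate and group reporting data."""
    if day < AI_OVERVIEWS_US:
        return "pre-AI Overviews"
    if day < NUM_100_REMOVED:
        return "AI Overviews, pre-&num=100 removal"
    return "post-&num=100 removal"


def ctr_by_era(rows):
    """rows: iterable of (day, clicks, impressions). Returns CTR per era."""
    clicks: dict[str, int] = defaultdict(int)
    impressions: dict[str, int] = defaultdict(int)
    for day, day_clicks, day_impressions in rows:
        label = era(day)
        clicks[label] += day_clicks
        impressions[label] += day_impressions
    return {label: clicks[label] / impressions[label]
            for label in impressions if impressions[label]}


# Hypothetical daily rows straddling the &num=100 change.
rows = [(date(2025, 9, 1), 40, 2_000), (date(2025, 9, 20), 40, 1_000)]
print(ctr_by_era(rows))
# {'AI Overviews, pre-&num=100 removal': 0.02, 'post-&num=100 removal': 0.04}
```

Grouping by era keeps post-change CTR from being judged against a bot-inflated baseline.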

Conclusion

So, where do we go from here? The change didn’t break SEO; it fixed our data. It essentially pulled back the curtain and revealed that the “Great Decoupling” was more of a bot-driven illusion than a pure AI phenomenon.

The future of search isn’t about gaming an algorithm to get a higher ranking. It’s about creating content that is so valuable, authoritative, and trusted that it becomes the definitive answer, whether that answer is delivered by a human click or an AI Overview.

Feeling lost in the new search landscape? Let us help you develop an AI-first SEO strategy that focuses on real growth, not vanity metrics.